“Salt” resembles many science-fiction films from the ’70s and early ’80s, complete with 35mm footage of space freighters and moody alien landscapes. But while it looks like a throwback, the way it was created points to what could be a new frontier for making movies.

“Salt” is the brainchild of Fabian Stelzer. He’s not a filmmaker, but for the last few months he’s been largely relying on artificial intelligence tools to create this series of short films, which he releases roughly every few weeks on Twitter.

Stelzer creates images with image-generation tools such as Stable Diffusion, Midjourney and DALL-E 2. He makes voices mostly with AI voice-generation tools such as Synthesia or Murf. And he uses GPT-3, a text generator, to help write the scripts.

There’s an element of audience participation, too. After each new installment, viewers can vote on what should happen next. Stelzer takes the results of these polls and incorporates them into the plots of future films, which he can turn out more quickly than a traditional filmmaker could because he’s using these AI tools.

“In my little home office studio I can make a ’70s sci-fi movie if I want to,” Stelzer, who lives in Berlin, said in an interview with CNN Business from that studio. “And actually I can do more than a sci-fi movie. I can think about, ‘What’s the movie in this paradigm, where execution is as easy as an idea?'”

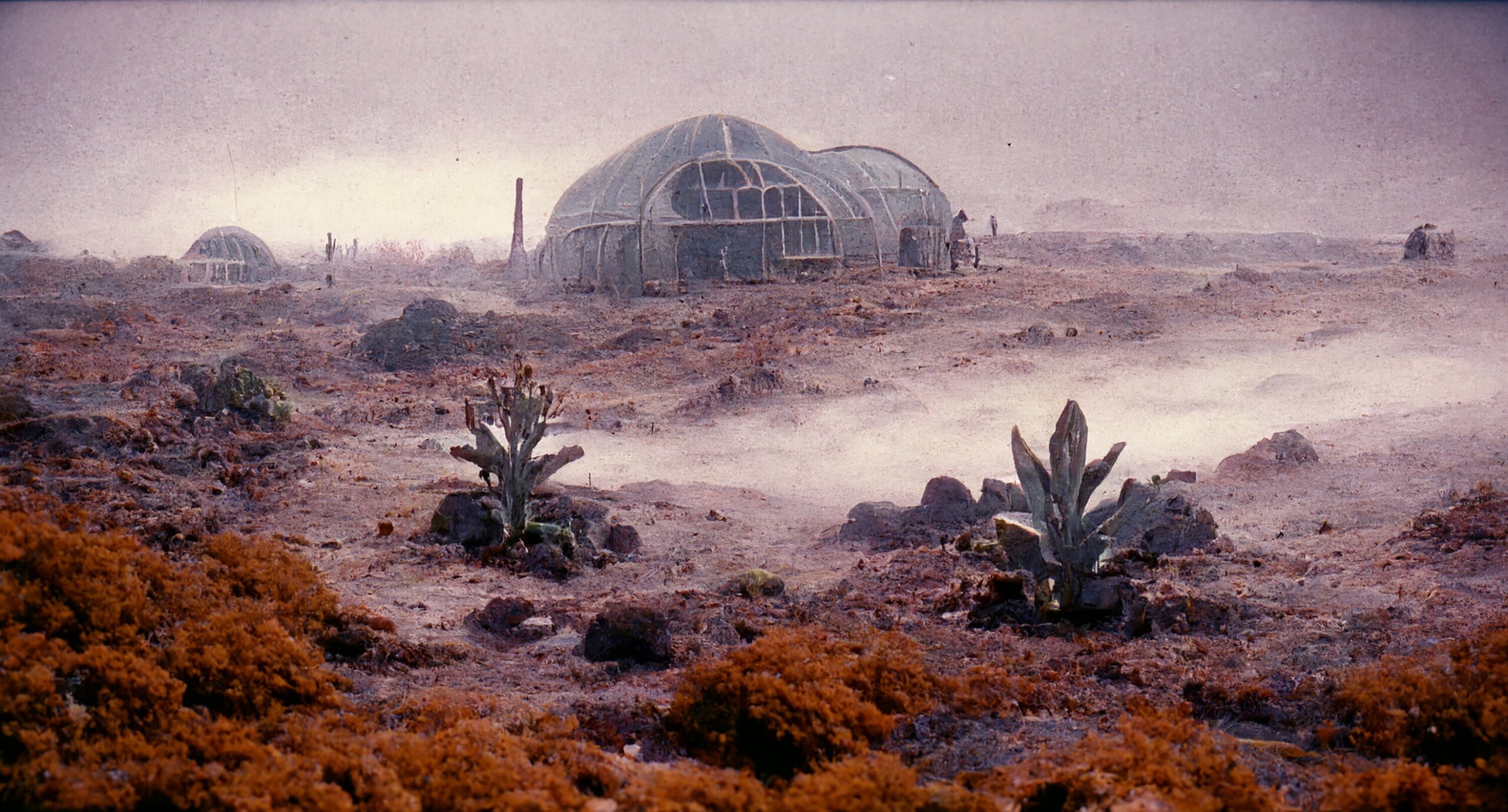

The plot is, at least for now, still vague. As the trailer shows, it generally focuses on a distant planet, Kaplan 3, where an overabundance of what initially appears to be mineral salt leads to perilous situations, such as somehow endangering an approaching spaceship. To make things more confusing (and intriguing), several narrative threads are introduced, along with, perhaps, some temporal anomalies.

The resulting films are beautiful, mysterious, and ominous. So far, each film is less than two minutes long, in keeping with Twitter’s maximum video length of two minutes and 20 seconds. Occasionally, Stelzer will tweet a still image and a caption that contribute to the series’ strange, otherworldly mythology.

Just as AI image generators have already unnerved some artists, Stelzer’s experiment offers an early example of how disruptive AI systems could be to moviemaking. As AI tools that can produce images, text, and voices become more powerful and accessible, they could change how we think about idea generation and execution, challenging what it means to create and to be a creator. Although these videos have only a limited following, some in the tech space are watching closely and expect more to come.

“Right now it’s in an embryonic stage, but I have a whole range of ideas of where I want to take this,” Stelzer said.

“Shadows of ideas and story seeds”

The idea for “Salt” emerged from Stelzer’s experiments with Midjourney, a powerful, publicly available AI system that generates an image in response to a user’s text prompt. The prompts he fed the system produced images that he said “felt like a film world,” depicting things like alien vegetation, a mysterious figure lurking in the shadows, and a weird-looking research station on an arid mining planet. One image included what appeared to be salt crystals, he said.

“I saw this in front of me and was like, ‘Okay, I don’t know what’s happening in this world, but I know there’s lots of stories, interesting stuff,'” he said. “I saw narrative shades and shadows of ideas and story seeds.”

Stelzer has a background in AI: He co-founded a company called EyeQuant in 2009 that was sold in 2018. But he doesn’t know much about making films, so he started teaching himself with software and created a “Salt” trailer, which he tweeted on June 14 with no written introduction. (The tweet did include a salt-shaker emoji, however.)

A couple of days later came what Stelzer calls the first episode. He’s put out several so far, along with numerous still images and some brief film clips. Eventually, he hopes to cut the pieces of “Salt” into one feature-length film, he said, and he’s building a related company to make films with AI. He said it takes about half a day to make each film.

The vintage sci-fi vibe is partly an homage to a genre Stelzer loves and partly a necessity due to the technical limits of AI image generators, which are still not great at producing images with high-fidelity textures. To get AI to generate the images, he crafts prompts that include phrases like “a sci-fi research outpost near a mining cave,” “35mm footage,” “dark and beige atmosphere,” and “salt crusts on the wall.”

The look of the film is also fitting for Stelzer’s editing style as an amateur auteur. Because he’s using AI to generate still images for “Salt,” Stelzer uses some simple techniques to make the scenes feel animated, like jiggling portions of an image to make it appear to move or zooming in and out. It’s crude, but effective.

“Salt” goes to college

“Salt” has a small but charmed following online. As of Wednesday, the Twitter account for the film series had roughly 4,500 followers. Some of them have asked Stelzer to show them how he’s making his films, he said.

Savannah Niles, director of product and design at AR and VR experience builder Magnopus, has been following “Salt” on Twitter and said she sees it as a prototype of the future of storytelling, in which people actively participate in and contribute to a narrative that AI helps build. She hopes that tools like those Stelzer uses can eventually make it cheaper and faster to produce films, which today can involve hundreds of people, take several years, and cost millions of dollars.

“I think that there will be a lot of these coming up, which is exciting,” she said.

It’s also set to become a teaching aid. David Gunkel, a professor at Northern Illinois University who has been watching the films via Twitter, said he has previously used a short sci-fi film called “Sunspring” to teach his students about computational creativity. Released in 2016 and starring “Silicon Valley” actor Thomas Middleditch, it’s thought to be the first film that used AI to write its script. Now, he’s planning to use “Salt” in his fall-semester communication technology classes, he said.

“It does create a world you feel engaged in, immersed in,” he said. “I just want to see more of what’s possible, and what will come out of this.”

Stelzer said he has a “somewhat cohesive” idea of what the overall narrative structure of “Salt” will be, but he isn’t sure he wants to reveal it, in part because community involvement has already pushed the story away from what he had planned in some ways.

“I’m actually not sure whether the story I have in my mind will play out like that,” he said. “And the charm of the experiment to me, intellectually, is driven by the curiosity to see what I as the creator and the community can come up with together.”