For all the latest developments in Congress, follow WTOP Capitol Hill Correspondent Mitchell Miller at Today on the Hill.

A bipartisan bill has been introduced in the U.S. Senate to address the kind of situation a Texas high school girl says she experienced, when a fellow student used some of her photos to create deepfake images that made it appear she was naked.

Elliston Berry and her mother came to Capitol Hill this week and talked about what happened to her last fall, when she was a freshman.

Berry said she was in disbelief when she saw the photos, which were posted on Snapchat.

“Once these photos were released, I dreaded school and was anxious to even step foot on campus,” she said.

Her mother, Anna McAdams, said she repeatedly tried to get Snapchat to take down the photos but had no luck.

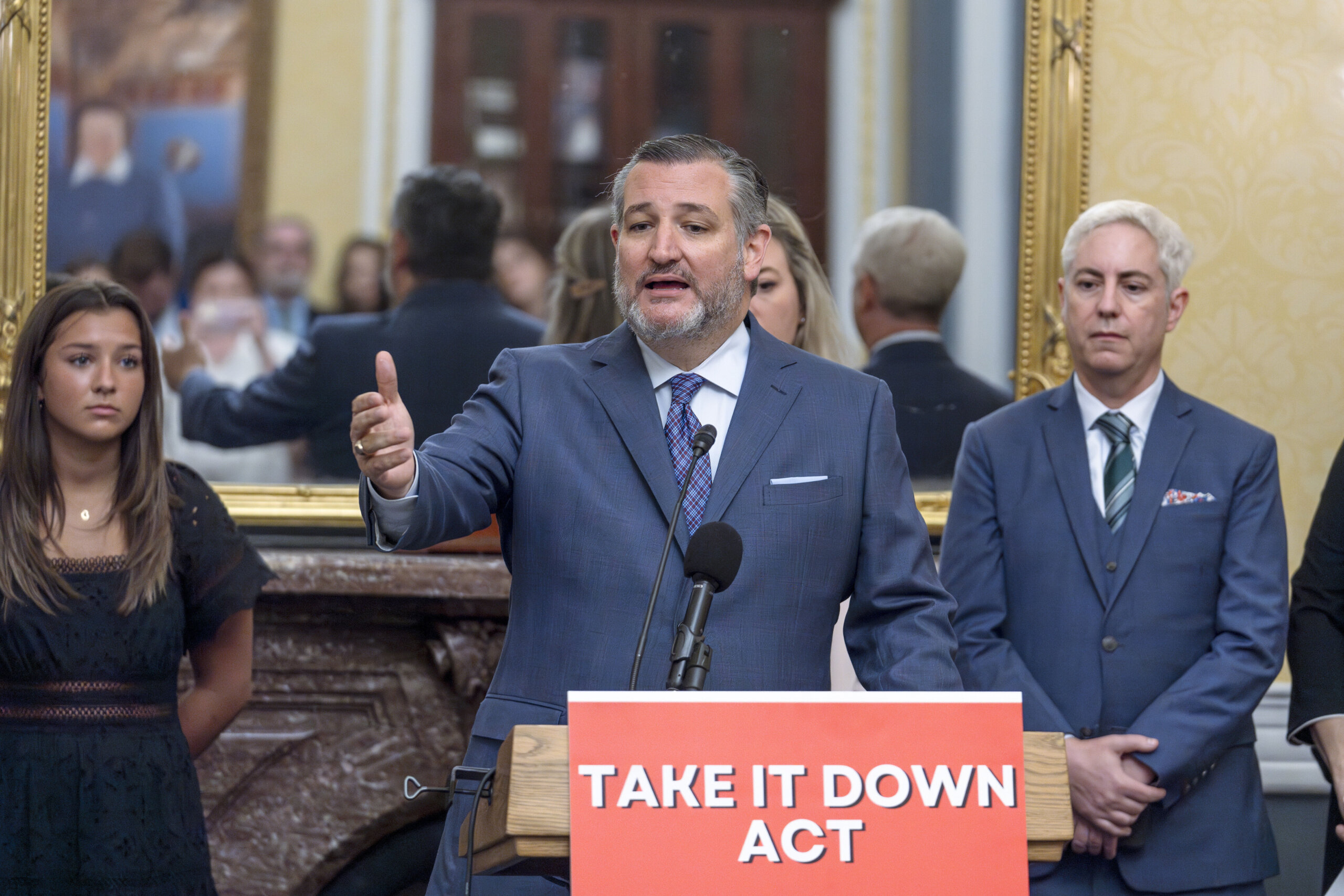

Ultimately, the staff of Texas Sen. Ted Cruz, a co-sponsor of the new legislation, intervened and the photos were removed.

“It should not take an elected member of Congress intervening to have these despicable lies pulled down from online,” Cruz said at a news conference.

The bill Cruz and others are proposing would criminalize publication of nonconsensual intimate imagery, including imagery generated by artificial intelligence (AI).

The legislation is called the Take It Down Act.

“If this garbage is put up against you, if it’s put up against your child, you have a right within 48 hours to force Big Tech to take it down,” Cruz said.

Cruz said up to 95% of deepfake videos depict nonconsensual intimate imagery of women and girls.

“In recent years, we’ve witnessed a stunning increase in exploitative sexual material online, largely due to bad actors taking advantage of newer technologies like generative artificial intelligence. Many women and girls are forever harmed by these crimes, having to live with being victimized again and again,” Cruz said.

Berry, now 15, said she supports efforts to prevent what happened to her from happening to other girls.

“I wouldn’t wish this experience upon anyone and hope to create something that will prevent any student from having to go through this,” she said.

Snapchat has said it does not allow pornographic images on its platform.

© 2024 WTOP. All Rights Reserved.